Green Car Report: Could Free Piston Range Extenders Broaden the Electric Truck Horizon?

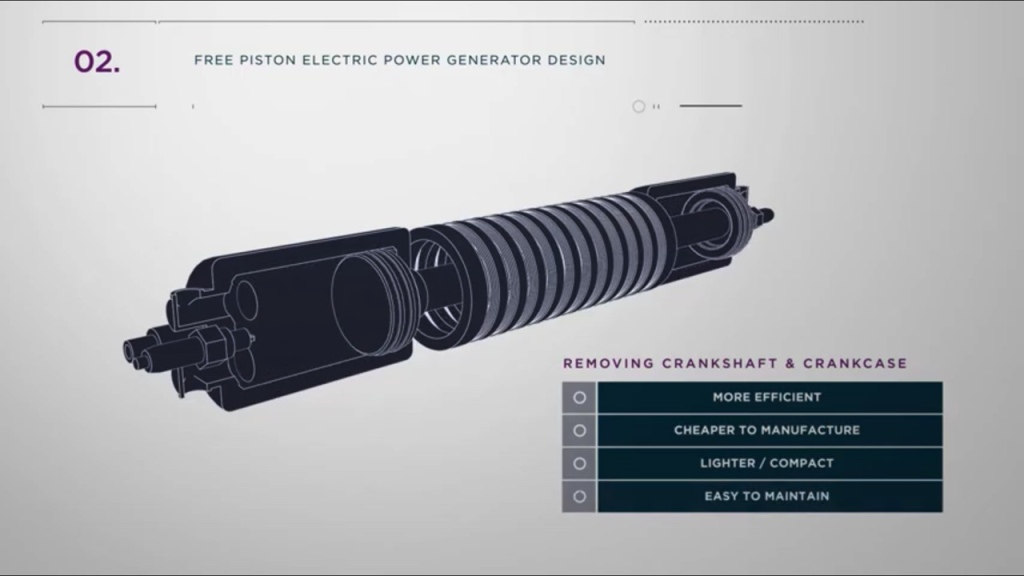

Not so much for the wings, wind turbines and solar cells, but because it is not one hull but seven (7!). Each of those subsections is essentially a barge with a rugged locking mechanism that creates a rigid seagoing hull once engaged. This way the crew and propulsion section can drop off and pick up sections, all of them or just one or two, as they make their rounds. The expensive bit gets much more use as well as being smaller and less expensive, and probably safer as well.

Money Quote:

There are several lessons here. The most politically salient is that in manufacturing, as in cooking, it is possible to “lose the recipe.” And with an accelerating pace of technological progress, it is possible to lose it in an alarmingly short span of time. This is perhaps the strongest argument for some form of industrial policy or trade protection: the recognition that the national value of manufacturing often lies not so much in the end product itself, but in the accumulated knowledge that goes into it, and the possibility of old processes and knowledge sparking new innovation. Of course, innovation is itself what killed the high-end cassette player. But many otherwise viable industries have struggled under the free-trade regime.

The fact is that technology is not embodied in a drawing or set of drawings or any set of instructions. It is embodied in human knowledge. One of the key problems in the industry is the loss of control a customer or prime has when they let a contractor develop the ‘data package’ and ‘product’ with no significant oversight. While the customer or prime may ‘own’ the IP because they paid for it, the fact is that the majority of the capability is embodied in the people and culture of the contractor not in any set of information.

The Hellenic world had machines as complex as early clocks and steam engines of a sort but lost the recipe in a few generations or less. Various complex building skills and wooden machines, metalworking and early chemistry were discovered then lost again and again because the data package was in human brains and examples. This is why the printing press and its ilk, along with a culture of progress and invention, were so incredibly important to technological liftoff.

We are far ahead of that world but, as above, not above losing the recipe of a complex technology. This is one of the drivers behind Computer Aided Design, Analysis, Documentation, Fabrication. Our cybernetic tools have the ability to record the data package in detail, at least for certain classes of things, so that we should be able to maintain the ability to replicate things. That makes special, small-run, even one-off technological objects rational rather than nutty.

But at the same time I think that it is likely that the artisanal ethos and products will remain relevant and even increase in value as people shift away from a mind/economy/culture of scarcity to at least sufficiency and, if we survive and expand into the universe, eventually richness. These transitions will be extremely difficult because they are at odds with many tens of thousands of years of genetic/memetic coding of our behaviors based on small group hunter gatherers and kin group bonding. Those transitions will be enabled by machines that fabricate, even machines that invent. What will happen when humans lose the recipe for technological advancement, because too few engage in the complex enterprise of development??? Is that the point of the Rise of the Machine???

BY BRYAN PRESTON FEB 17, 2021

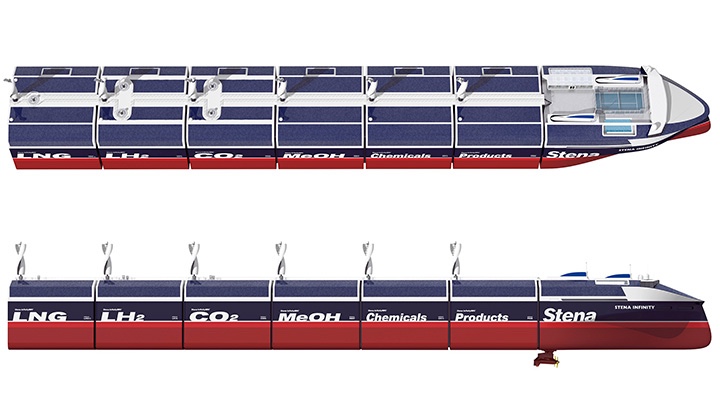

From StreetWiseProfessor: Who Is To Blame for SWP’s (and Texas’s) Forced Outage? “The facts are fairly straightforward. In the face of record demand (reflected in a crazy spike in heating degree days)…

…supply crashed. Supply from all sources. Wind, but also thermal (gas, nuclear, and coal). About 25GW of thermal capacity was offline, due to a variety of weather-related factors. These included most notably steep declines in natural gas production due to well freeze-offs and temperature-related outages of gas processing plants which combined to turn gas powered units into energy limited, rather than capacity limited, resources. They also included frozen instrumentation, water issues, and so on.”

So then Krugman rolls in from the NYT saying ‘Texas’ problem was Windmills’ is a Lie. Which itself, while not a lie in Detail, is a lie in Essence. As some top line thinking at ManhattanContrarian points out in This Piece:

Total winter generation capacity for the state is about 83 GW, while peak winter usage is about 57 GW. That’s a margin of over 45% of capacity over peak usage. In a fossil-fuel-only or fossil-fuel-plus-nuclear system, where all sources of power are dispatchable, a margin of 20% would be considered normal, and 30% would be luxurious. This margin is well more than that. How could that not be sufficient?

The answer is that Texas has gone crazy for wind. About 30 GW of the 83 GW of capacity are wind.

ManhattanContrarian

….sometimes the wind turbines only generate at a rate of 600 MW — which is about 2% of their capacity. And you never know when that’s going to be.
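A quick back-of-envelope check of those numbers, as a sketch only. The inputs are the figures quoted above (83 GW total, 57 GW peak, 30 GW wind, 600 MW low-wind output). The function name `reserve_margin` is mine, and so is the simplifying assumption that every non-wind megawatt is available, which per the SWP quote was not the case during the storm.

```python
def reserve_margin(available_gw, peak_gw):
    """Margin of available capacity over peak demand, as a fraction of peak."""
    return (available_gw - peak_gw) / peak_gw


# Figures quoted from ManhattanContrarian above.
TOTAL_GW = 83.0     # total winter generation capacity
PEAK_GW = 57.0      # peak winter usage
WIND_GW = 30.0      # wind share of that capacity
WIND_LOW_GW = 0.6   # 600 MW, the low-wind output mentioned in the quote

# Margin if every installed GW could be counted on.
nameplate = reserve_margin(TOTAL_GW, PEAK_GW)

# Illustrative assumption: all non-wind capacity is available,
# and wind is producing only its quoted low figure.
calm_day_gw = TOTAL_GW - WIND_GW + WIND_LOW_GW
calm_day = reserve_margin(calm_day_gw, PEAK_GW)

# Fraction of wind nameplate actually delivered on such a day.
wind_fraction = WIND_LOW_GW / WIND_GW

print(f"nameplate margin:      {nameplate:.1%}")
print(f"calm-day capacity:     {calm_day_gw:.1f} GW")
print(f"calm-day margin:       {calm_day:.1%}")
print(f"wind output / nameplate: {wind_fraction:.1%}")
```

On these inputs the nameplate margin is a bit over 45%, but with wind at its 600 MW low the same fleet comes in a few GW short of peak, before counting any thermal outages at all.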

But/And it IS complicated. 1) You can install deicing systems on windmills but they are expensive to install and maintain and require INPUT of electric power to operate (Texas average weather makes this uneconomic to install.) 2) Texas did this to itself, it has an independent Grid because it IS a country sized state, the grid operator is actually a Bit Wind Crazy…why…because Texas has a lot of wind power. 3) This weather is a Combination of once in a hundred year cold AND snow/cloud cover, which systems are not designed to deal with other than in some degraded manner.

So one can only hope that because it is complicated and is fairly easily shown to be so that the cool heads will be left to work out some solution that prevents this sort of thing happening again. Because yes weather is unpredictable and while this was a 1/100 double header, it did occur and that says that the odds may not be what we think they are and so some mitigation is required. That mitigation is Not more wind, Not stored power, it IS more nuclear +better of all the above, AND better links to the broader national grid, etc, etc.

Myself, I’m planning a new house in the country. Big propane tank, backup generator, solar power, grid tied battery backup, ultra insulated house (for the region.) My prediction is that the grid is going to get worse not better, and if you can, you need to be able to survive without electric power from the mains for a week or more. I can make that possible, though I am in the few percent just because of location, situation, grace of the Infinite.

Axiom is not as famous as SpaceX or BlueOrigin, even Boeing or NG but it is setting up to be a big noise in commercial space. “Axiom Space, Inc., which is developing the world’s first commercial space station, has raised $130M in Series B funding”

In January 2020, NASA selected Axiom to begin attaching its own space station modules to the International Space Station (ISS) as early as 2024, marking the company as a primary driver of NASA’s broad strategy to commercialize LEO. While in its assembly phase, Axiom Station will increase the current usable and habitable volume on ISS and provide expanded research opportunities. By late 2028, Axiom Station will be ready to detach when the ISS is decommissioned and operate independently as its privately owned successor.

From the above ParabolicArc article.

But they are already in the ride share business, setting up launches of multiple smaller missions on one booster, Axiom buying the ride then working with the launch customers to integrate their satellites on the mission bus. Another recent milestone:

Lots of cSpace development, keep it coming…

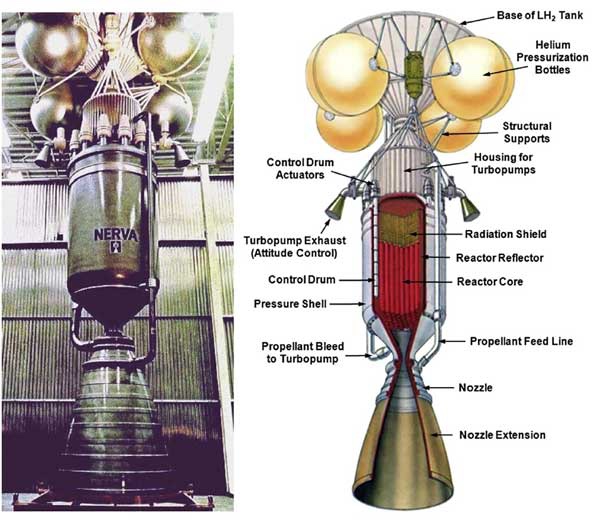

While a chemically powered trip to Mars is feasible given the ability to lift a lot of mass to orbit (see SpaceX and Elon Musk), this is probably not the solution you would go for first. I think it makes sense as part of the Vision Setting that Musk does, but the preference has always been for nuclear propulsion: it enables faster (safer) trips and makes reusability even more effective since the ‘shuttles’ are not spending many months in transit each way.

Posit a Freighter something like the illustration below, departing Mars having dropped off say 2, 3, 4 starships’ worth of cargo. MarsStarships shuttle up and down and provide point to point transport on Mars. EarthStarships shuttle cargo up to earth orbit. Maybe LunarStarships shuttle fuel from production stations on the Moon to reduce the cost of fuel for the starships and the Freighter.

Now you have a system that provides Access to the solar system with significant cargos and the ability to establish and support exploration stations wherever you go.

Seems like there must have been a mash up of astrophysics/cosmology/cybernetics a couple of weeks ago, as there have been a series of articles about computers and the universe. One series pointed out that one could conceive of using the AGB stars in their ‘dusting mode’ (above) as a computing engine.

But on the other side there have been a couple of articles that touch on the metaphysical (philosophical basis of reality) concept that we and our universe, are one vast simulation.

…Oxford philosopher Nick Bostrom’s philosophical thought experiment that the universe is a computer simulation. If that were true, then fundamental physical laws should reveal that the universe consists of individual chunks of space-time, like pixels in a video game. “If we live in a simulation, our world has to be discrete,”….

From: New machine learning theory raises questions about nature of science

….a discrete field theory, which views the universe as composed of individual bits and differs from the theories that people normally create. While scientists typically devise overarching concepts of how the physical world behaves, computers just assemble a collection of data points…..

From: New machine learning theory raises questions about nature of science

…A novel computer algorithm, or set of rules, that accurately predicts the orbits of planets in the solar system….

… devised by a scientist at the U.S. Department of Energy’s (DOE) Princeton Plasma Physics Laboratory (PPPL), applies machine learning, the form of artificial intelligence (AI) that learns from experience, to develop the predictions.

Qin (pronounced Chin) created a computer program into which he fed data from past observations of the orbits of Mercury, Venus, Earth, Mars, Jupiter, and the dwarf planet Ceres. This program, along with an additional program known as a ‘serving algorithm,’ then made accurate predictions of the orbits of other planets in the solar system without using Newton’s laws of motion and gravitation. “Essentially, I bypassed all the fundamental ingredients of physics. I go directly from data to data,” Qin said. “There is no law of physics in the middle…

…”Usually in physics, you make observations, create a theory based on those observations, and then use that theory to predict new observations,” said PPPL physicist Hong Qin, author of a paper detailing the concept in Scientific Reports. “What I’m doing is replacing this process with a type of black box that can produce accurate predictions without using a traditional theory or law.”…

From: New machine learning theory raises questions about nature of science

Ok so now I am going to go a bit sideways and you may want to just go on about your internet day. But while I laud Qin and his team, I have a bit of an issue with what he claims re the basis in Philosophy. Not the claim that the discrete field theory sparked his concept exploration, but the claim that the actual system he developed has anything to say about that metaphysical theory.

Taking nothing away from the team, what I see seems like a straightforward application of machine learning. In fact a relatively simple one, though I would laud the whole idea of applying it to physics in general. Very interesting though, like many interesting insights, oddly obvious in retrospect. (Sorry for the repeated Though clauses…I absolutely see this as fascinating insight…and possibly extremely important…it just seems like D’oh in retrospect.)

As physics is very much aligned with mathematics (I think because the discovery of each was feedback on the other) and mathematics and cybernetics are also deeply entwined, it should come as no surprise that computer systems designed to create black box solutions, when fed the right kind of data, will create a black box model of physical phenomena.
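To make that point concrete, here is a toy sketch of going "from data to data". This is emphatically not Qin's algorithm, which the quoted article does not describe in any detail. It is a plain least-squares fit on made-up data: the circular orbit, the function names (`make_orbit`, `fit_step_map`, `predict`) and the sample counts are all my own assumptions. The fit is handed only a list of past positions and learns a 2x2 matrix that maps one position to the next, with no law of gravitation anywhere in it.

```python
import math


def make_orbit(n_samples, step_angle, radius=1.0):
    """Synthetic 'observations': positions on a circular orbit at equal time steps."""
    return [(radius * math.cos(k * step_angle), radius * math.sin(k * step_angle))
            for k in range(n_samples)]


def fit_step_map(points):
    """Least-squares fit of a 2x2 matrix A so that next_position ~= A @ position."""
    sxx = sxy = syy = 0.0            # entries of S = sum of x x^T
    c00 = c01 = c10 = c11 = 0.0      # entries of C = sum of y x^T
    for (x0, x1), (y0, y1) in zip(points, points[1:]):
        sxx += x0 * x0
        sxy += x0 * x1
        syy += x1 * x1
        c00 += y0 * x0
        c01 += y0 * x1
        c10 += y1 * x0
        c11 += y1 * x1
    det = sxx * syy - sxy * sxy
    # Inverse of the symmetric 2x2 matrix S.
    i00, i01, i11 = syy / det, -sxy / det, sxx / det
    # A = C S^-1 (the normal-equations solution).
    return ((c00 * i00 + c01 * i01, c00 * i01 + c01 * i11),
            (c10 * i00 + c11 * i01, c10 * i01 + c11 * i11))


def predict(step_map, start, n_steps):
    """Roll the learned map forward from the last observed position."""
    (a, b), (c, d) = step_map
    x, y = start
    path = []
    for _ in range(n_steps):
        x, y = a * x + b * y, c * x + d * y
        path.append((x, y))
    return path


# 'Observe' 40 samples, learn the step map, then predict the next 20.
observed = make_orbit(40, 0.1)
step_map = fit_step_map(observed)
predicted = predict(step_map, observed[-1], 20)

# Compare against the positions the synthetic orbit actually reaches.
truth = make_orbit(60, 0.1)[40:]
worst_error = max(math.dist(p, t) for p, t in zip(predicted, truth))
print(f"worst prediction error over 20 steps: {worst_error:.2e}")
```

The learned matrix turns out to be a rotation, which is the "physics" of this toy orbit, but nothing in the fit knows that. It is a black box that happens to predict well, and it says nothing about whether space is discrete.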

The outputs of science are tools that allow us to predict finite things about the universe we live in, repeatably and accurately. These tools are often used by engineers to enable technology that makes life better for everyone.

But in many ways this is an engineer’s (relatively narrow) viewpoint. To some large degree an engineer does not care why the tool works, only that it does and how accurately. Counter to that, a strength of the theory based + mathematical model approach is that it gives you a tool to link the rest of reality to the ‘discrete’ piece you are working on right now. A jumping off point or a linking point to other theories that allows us to move onto other problems and link the

And/But (you knew it was coming) I wonder if this has anything to do with discrete field theory per se. Maybe if the learning algorithm used had that in it, this would show something of that nature. Otherwise I do not see this as showing anything in particular other than the ability of learning systems, which are in some sense continuous not discrete systems, to develop predictive models directly from the data (as Qin says) rather than through the labor intensive methods of theory extraction and proof that have been the basis for scientific exploration since it first evolved in the Middle Ages.

Again BUT, it has been getting harder to develop these ‘deep’ theories. Look at the colliders and other tools that physicists use these days to probe the depths of our reality. In this world there are many things, like Qin’s next test with Nuclear Fusion, where an engineering model might be much more valuable than a ‘theory of this’ if it can be captured and used in a fraction of the time.

It’s all good, fascinating, wonderful…but let’s not get ahead of ourselves.

Wow